Advanced Security Scanning: How Protect AI Platform and Semgrep Code Deliver AI-Enhanced Defence for Modern DevOps

73% of AI-Powered DevOps Environments Are Blind to Their Own Vulnerabilities—Is Yours One of Them?

The last thing you want is your AI “genius” quietly handing out firewall keys like party favours. Yet traditional scanners routinely mistake large language models for inscrutable black boxes—and fail to spot lurking threats inside. Welcome to 2025, where AI doesn’t just change software development; it weaponises complexity that shoots down your old-school security tools without mercy.

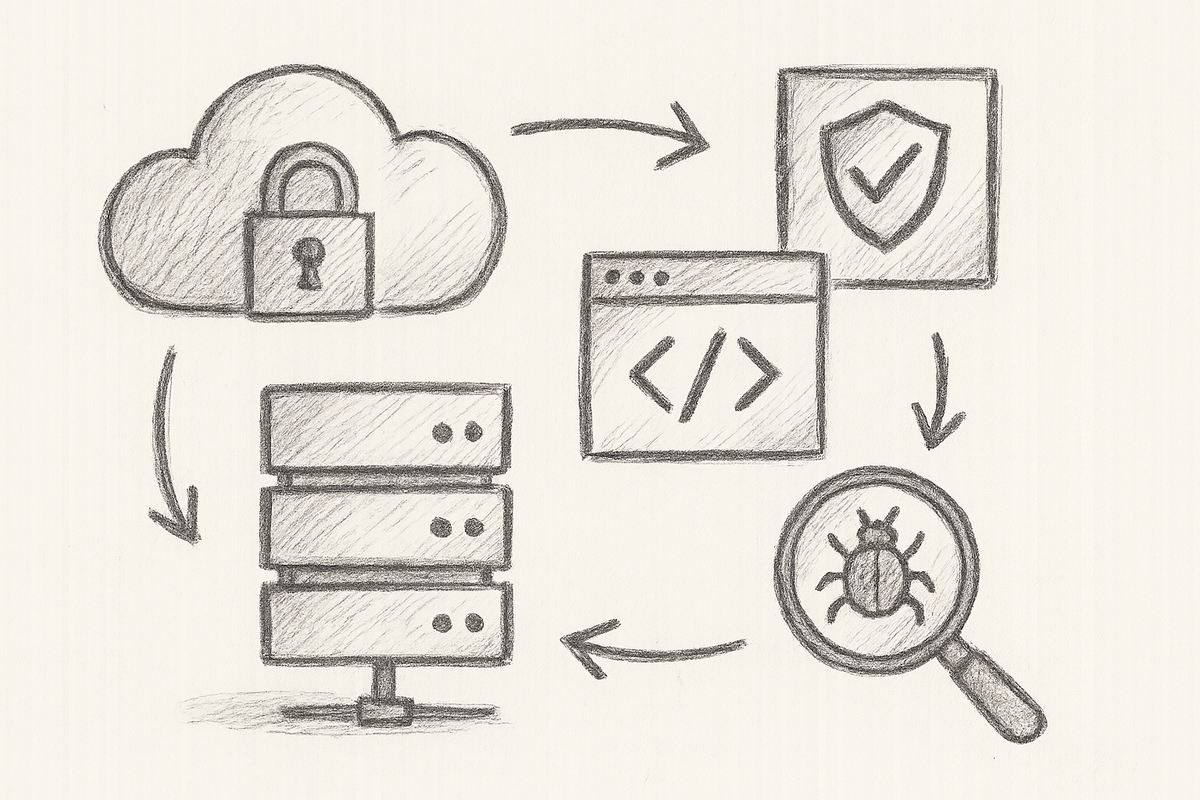

The AI Security Challenge for DevOps: More Than Just a New Name for Old Risks

Over the years, I’ve wrestled with fires of every kind in production. But AI-related incidents? They’re like a shape-shifting phantom—fast, stealthy, and downright inscrutable. Conventional scanners choke on AI models—especially LLMs—because these aren’t static lines of code; they’re living algorithms split between data, weights, and behaviour.

Here’s the rub:

- Securing the AI model itself requires more than scanning static code. It means guarding training data from poisoning, cracking prompt injection, and spotting adversarial payloads—threats invisible to legacy scanners.

- A sprawling multi-language DevOps ecosystem — with Python in AI pipelines, Go for back-ends, JavaScript on the front-end and Terraform stitching infrastructure — blows traditional static analysis out of the water. False positives flood your channels while true vulnerabilities lurk silently.

This is why Protect AI Platform and Semgrep Code have become indispensable allies. Protect AI shields the AI lifecycle—from data integrity all the way to runtime anomaly detection—scanning with AI smarts. Semgrep, meanwhile, delivers laser-precise static analysis across 30+ languages with custom AI-augmented rules tailored for modern polyglot code and AI quirks.

I’ll take you through the brutal pain points, surprising “aha” moments, and the blueprint to tame the AI security chaos without losing your sanity.

For a comprehensive look at how AI innovations are transforming DevSecOps, check out AI-Powered DevSecOps: How Aikido Security, Snyk DeepCode AI, and Google Big Sleep Slash Vulnerability Remediation Time.

Why Your Traditional Security Scanners Are Essentially Useless in AI DevOps

Let’s cut to the chase: legacy scanners are relics of an era where apps were monolithic, binaries were static, and attack surfaces fixed. Enter AI models, especially LLMs trained live on dynamic data streams, and these tools flail helplessly.

Consider the infamous CVE-2024-36715. This code-injection flaw slipped past scanners because the tools simply didn’t parse injected model scripts inside AI microservices. Wait, what? A glaring vulnerability evaded detection by your “trusted” scanner because it didn’t know what to look for in AI-generated code?

Layer in sprawling codebases spanning Python, Go, Javascript, and Terraform, and your old scanners are either overwhelmed or utterly blind. The result? Alert avalanches so loud your team burns out chasing ghosts while actual threats roam free.

One engineering team I supported confessed their “security pipeline” stretched to 25 minutes per commit check — a joke in any fast-paced GitOps world.

And here’s the kicker: AI-specific threats like prompt injections or adversarial payloads don’t register on traditional scanners’ radars at all. It’s like having a smoke detector that ignores electrical fires.

Meet Protect AI Platform: The Swiss Army Knife for AI Model Protection

Imagine a solution designed to secure AI models end-to-end—from data ingestion to model runtime behaviour. That’s Protect AI Platform in a nutshell.

Its standout features include:

- LLM-Specific Threat Detection: Think of it as a bouncer for your AI models, spotting prompt injection attempts and twisted adversarial inputs aiming to confuse or hijack your system.

- Data Integrity Assurance: Cryptographic provenance checks to catch data poisoning before it poisons your entire AI brain.

- Runtime Anomaly Monitoring: AI-driven detectors prowling your production models, ready to trigger alarms before chaos erupts.

- CI/CD Integration That Doesn’t Ruin Developer Happiness: Plug-n-play Kubernetes pipelines that enforce policies pre-deployment with minimal friction. (Kubernetes Security Best Practices (CNCF))

Here’s a declarative Kubernetes integration snippet to get your feet wet:

apiVersion: security.protectai.io/v1

kind: ModelScanPolicy

metadata:

name: ai-security-policy

spec:

triggers:

- event: pre-deployment # Run scans before deployment

rules:

- detectPromptInjection: true # Detect AI prompt injections

- enforceDataProvenance: true # Validate data integrity provenance

alertLevel: critical # Set alert severity

Tip: Monitor logs for scanning errors and have rollback options ready if scans block critical deployments unexpectedly.

Combine this with rich dashboards, API-driven alerts, and even automatic remediation for a platform that truly belongs in the DevOps trenches.

See also Protect AI Platform (conceptual) – Azure AI Model Security Framework.

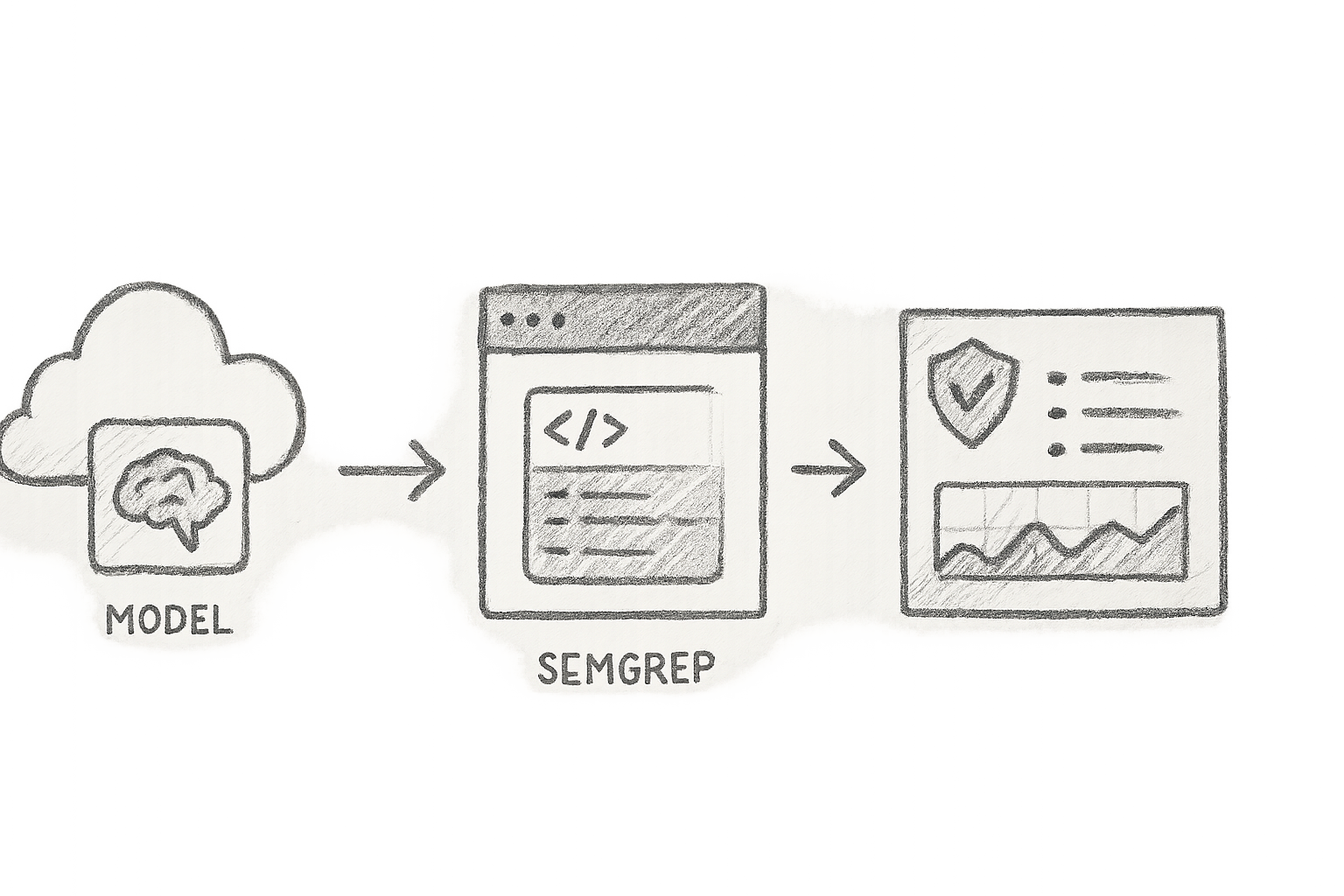

Semgrep Code: Static Analysis on Steroids for Polyglot AI Codebases

If legacy scanners drown you in noise, Semgrep swoops in like a saviour. It fuses traditional pattern matching with AI-powered contextual awareness to slash false positives by up to 98% (Semgrep 2025 Summer Release).

Supporting 30+ languages, whether you’re wrangling Python AI pipelines or Terraform infrastructure as code, Semgrep has got you covered.

The pièce de résistance? Semgrep Assistant: an AI buddy that triages alerts, spits out fix snippets, and can even auto-generate bespoke rules based on your project quirks.

Check this custom rule that flagged unsafe machine learning model loading in Python:

rules:

- id: insecure-ml-model-loading

patterns:

- pattern: |

model = torch.load($PATH, map_location='cpu') # Loading ML model without validation

message: "Loading ML model without validation can risk injecting malicious payloads."

languages: [python]

severity: ERROR

Security note: Always validate and sanitise ML models loaded dynamically to prevent payload injection attacks.

Integration options cover CLI, GitHub Actions, Jenkins, or GitLab CI. From my own experience, scans typically juggle multi-million-line repos in under 10 seconds — a stark contrast to the legacy snail pace.

Official docs sing its praises:

“Semgrep performs dataflow analysis to reduce false positives by up to 98%, while AI triage further filters noise, so security teams only focus on true issues.”

(See Semgrep Official Documentation)

How I Integrated Both Platforms: Lessons from the Trenches

I’ve got battle scars from setting this up myself. Here’s the distilled plan you want, or risk turning your team into alert slaves:

- Deploy Protect AI agents in your AI workload namespaces on Kubernetes via helm chart or operator. Tune your scan policies carefully—the first few days can be a noisy mess.

- Set up Semgrep scans as pre-commit hooks or CI jobs. Start with curated rules, then layer AI-augmented custom rules for your AI handling code. Don’t block merges unless issues are critical—trust me, pipeline velocity is gold.

- Automate ticket creation: on Semgrep failures, create Jira issues with AI Assistant’s fix suggestions. On Protect AI alerts, trigger runbook workflows to notify Site Reliability Engineers with precise anomaly insights.

- Handle errors and scale smartly: cache Semgrep results for unchanged code to speed scans; throttle Protect AI’s resource usage at peak times.

- Monitor effectiveness: dashboards combining telemetry from both tools reveal alert volumes, response times, false positive trends. Adjust as needed.

Here’s a snippet to run Semgrep with AI Assistant on GitHub Actions:

jobs:

semgrep_scan:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v3

- name: Semgrep Scan

uses: returntocorp/semgrep-action@v1

with:

config: "p/ci" # Use CI preset rules

github_token: ${{ secrets.GITHUB_TOKEN }}

output: sarif # Enable SARIF output for tooling integration

(For the full drill on operational complexity and teamwork, see Open-Source Developer Platforms Unleashed: How Backstage, Gitea, and Kraken CI Solve DevOps Collaboration Nightmares with Production-Grade Implementation.)

The Aha Moment: More Scanning ≠ More Security

Here’s a nugget you won’t hear at the watercooler: dumping every scanner you can find into your pipeline is a spectacularly bad idea. More scanners mean more alerts, more chaos, and paradoxically—less security.

One slow-motion trainwreck I witnessed involved triage paralysis: it took weeks to identify a single true positive buried inside a sea of 10,000 false alarms. A security team’s worst nightmare.

The future is about context-aware AI-augmented scanning that prioritises risk, automates triage, and blends static code checks with runtime behavioural analytics. The mantra? Not “Scan everything, fix everything,” but rather “Scan smartly, fix swiftly.”

What’s Next in AI-Driven Security? Buckle Up

The horizon is both exhilarating and terrifying:

- Generative AI assistants that synthesise vulnerability patches and test cases on demand. (Yes, your code reviewer might soon be a bot.)

- Enhanced runtime observability for federated AI systems, with ethical guardrails deeply embedded.

- APIs that feed AI security scanning results directly into DevSecOps workflows, enabling faster, smarter incident responses.

- A convergence of AI security and software supply chain transparency to tackle dependency risks head-on.

The pace of innovation means you’ll either ride the tsunami or get wiped out by it.

Conclusion: Ditch the Butter Knife — Arm Yourself with AI-Augmented Defence

Launch small, but launch smart. Deploy Semgrep on your highest-risk repositories with AI triage enabled, pilot Protect AI on Kubernetes AI pipelines, measure incident reduction and fix times closely. Evolve your policies iteratively.

Clinging to old scanners is like bringing a butter knife to a gunfight. Embrace AI-augmented tools—or prepare to be memed into infamy by the next major outage.

References

- Semgrep Official Documentation

- Protect AI Platform (conceptual) – Azure AI Model Security Framework

- CVE-2024-36715: NVD Detail

- Semgrep 2025 Summer Release Blog

- Kubernetes Security Best Practices (CNCF)

- OWASP Top 10 2024

Author’s note: If surviving the AI security circus is your goal, better arm yourself with the right weapons—and a sixth sense for dumping the dead weight tools.

Cheers, and may your models stay robust and your alert noise mercifully quiet.