AI-Powered DevSecOps: How Aikido Security, Snyk DeepCode AI, and Google Big Sleep Slash Vulnerability Remediation Time

Why Are Vulnerabilities Lurking for Over 200 Days—And Who’s Responsible?

In 2024, organisations stumbled helplessly as high-severity vulnerabilities lingered for an alarming median of around 11 to 26 days according to recent industry research. Yet, the tools we trusted to keep us safe behaved like drunk party-goers, dumping alerts like confetti with zero context or prioritisation. The result? Alert fatigue that turned security teams into unintentional spectators. Anyone else feel that pit in the stomach when a new vulnerability pops up and you think, “Great, now what?”

This isn’t just another tale of alarm bells ringing in a vacuum. Traditional vulnerability management has become a quagmire of noise and lost opportunities, creating immense operational drag. The game-changer? AI-powered DevSecOps. Far from being a next-gen buzzword, this tech is the existential survival kit in today’s relentless threat environment.

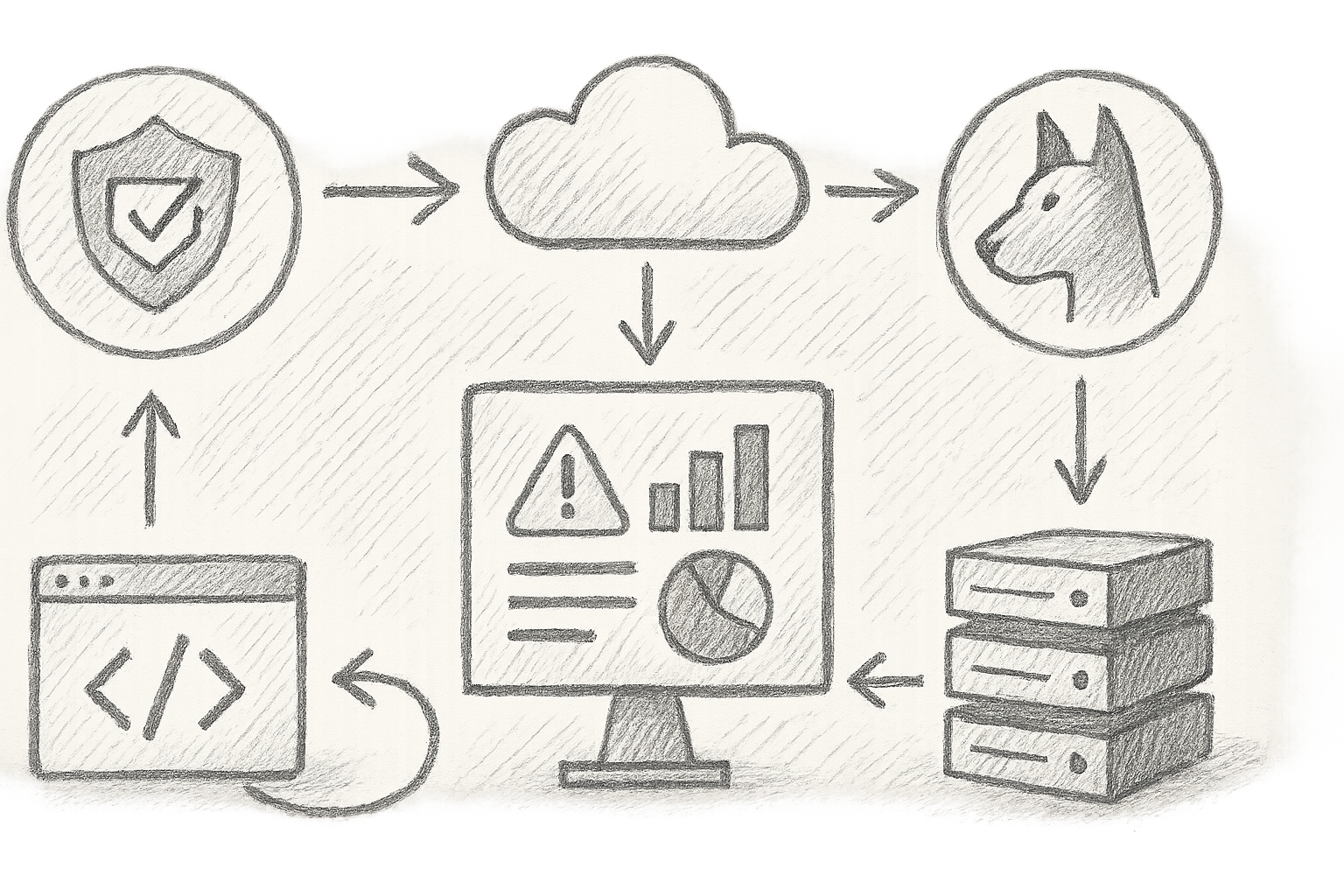

Join me as I dive into three AI juggernauts reshaping vulnerability management: Aikido Security, Snyk DeepCode AI, and Google Big Sleep—platforms slashing remediation times, automating toil, and hunting invisible threats with ruthless precision. Hold on tight, because what you’ve traditionally relied on is about to feel like amateur hour.

Why Traditional Vulnerability Tools Are Failing Miserably (And It’s Not Your Team’s Fault)

Let’s be blunt: If your vulnerability scanner’s alert volume spikes into the thousands weekly, you’re no longer managing security—you’re babysitting noise. Imagine being part of a fintech team where the scanner spat out 3,000 alerts in one dreadful week. The engines behind remediation screeched to a halt, overwhelmed by the avalanche. Context? Nada. Prioritisation? Non-existent. What vulnerable assets really mattered? Who knew? Weeks went by without a single patch deployed, and I can confirm…the criminals noticed.

Here’s the kicker most teams don’t talk about. Traditional tools snag only the known knowns: signature-based scanners match code and dependencies against catalogues of already-disclosed flaws, so novel, zero-day-style vulnerabilities sit squarely in their blind spot. You’re almost setting out a velvet rope for attackers you can’t see. Industry breaches repeatedly trace back to these ‘unknown unknown’ vulnerabilities, silently exploited until the damage is done.

Isn’t it about time vulnerability management grew up?

Enter Aikido Security: The Automated Fix-Generator That Won’t Let You Down (All The Time)

What Makes Aikido Different?

Aikido Security aims to crush remediation drag by automating everything from detection through to pull requests for fixes. We're talking about a pipeline so smooth it practically brews your morning coffee. It detects vulnerabilities across codebases, containers, and cloud workloads, then writes and tests fixes automatically. “Wait, what?” Yep, no manual handholding for low-hanging fixes.

Putting Aikido to Work

Plug it into your CI/CD pipeline with a few lines of config (see code snippet below). It scans your repositories before merging, then generates fix PRs automatically. Of course, it gracefully handles failures with detailed exit codes, so your automation doesn’t faceplant after a hiccup.

# Verify the image name and CLI flags against Aikido's current documentation before use.
version: 2.1

jobs:
  aikido_scan:
    docker:
      - image: aikido/security-cli:latest # Use latest stable CLI image from Aikido repo
    steps:
      - checkout
      - run:
          name: Run Aikido vulnerability scan
          command: aikido scan --repo . --output results.json
      - run:
          name: Auto-generate fix PRs
          command: aikido autofix --results results.json --create-pr
          # Note: Ensure commit user permissions for PR creation in your CI environment

workflows:
  version: 2
  scan_and_fix:
    jobs:
      - aikido_scan

Expected output: Scan results logged into results.json, automated PRs for fixes if applicable.

Troubleshooting tip: Check exit codes and CI logs if automation halts unexpectedly.
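
To make the exit-code advice concrete, here is a minimal Python sketch of a wrapper that runs each pipeline step, records its exit code, and reports the first failure. The names `run_step`, `first_failure`, and `StepResult` are hypothetical helpers, and the commands are stand-ins rather than real scanner invocations; which exit codes a given scanner uses is something to confirm in its documentation.

```python
from __future__ import annotations

import subprocess
import sys
from dataclasses import dataclass


@dataclass
class StepResult:
    name: str
    exit_code: int
    ok: bool


def run_step(name: str, command: list[str], ok_codes: frozenset = frozenset({0})) -> StepResult:
    """Run one pipeline step and record its exit code instead of raising."""
    completed = subprocess.run(command, capture_output=True, text=True)
    return StepResult(name, completed.returncode, completed.returncode in ok_codes)


def first_failure(results: list[StepResult]) -> StepResult | None:
    """Return the first step that failed, or None if every step passed."""
    return next((r for r in results if not r.ok), None)


if __name__ == "__main__":
    # Stand-in commands so the sketch runs anywhere; swap in the real scanner calls.
    steps = [
        ("scan", [sys.executable, "-c", "import sys; sys.exit(0)"]),
        ("autofix", [sys.executable, "-c", "import sys; sys.exit(3)"]),
    ]
    results = [run_step(name, cmd) for name, cmd in steps]
    failed = first_failure(results)
    if failed is not None:
        print(f"step '{failed.name}' exited with code {failed.exit_code}")
```

Recording the code rather than raising keeps the decision about what counts as a failure in one place, which helps when a tool uses a non-zero code to mean "findings present" rather than "tool crashed".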

My Experience: The Fivefold Speed-Up

I recall implementing Aikido in an npm-heavy environment where patching lagged dreadfully. Within weeks, the turnaround plummeted from days to hours. Developers stopped grumbling about mundane patch scripting and focused on high-value features instead. That morale boost? Priceless.

Caveats

Heads up—automatic fixes aren’t panaceas. Some fixes inevitably fail your tests or don’t consider intricate context, demanding manual intervention. Integration complexity can pile up in diverse language environments, so expect some homework.

For full details, see Aikido Security's official documentation.

Snyk DeepCode AI: Code Analysis That Actually Explains It—With Fixes

What’s the Big Deal?

Forget static scanners screaming “vulnerability!” with no clue how to fix it. Snyk DeepCode AI uses machine learning to scan code repositories and suggest developer-friendly fixes straight inside your PR reviews. This isn’t just scanning; it’s an apprenticeship on secure coding.

Easy Integration That Developers Love

Embed it in pre-commit hooks, CI pipelines, or IDEs. Developers get immediate feedback, and the fixes suggested are actionable.

Example pre-commit hook:

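#!/bin/sh
# Run Snyk code test before each commit; output JSON report.
snyk code test --json > snyk_report.json
status=$?
if [ "$status" -eq 1 ]; then
  echo "Security issues detected. Refer to snyk_report.json" >&2
  exit 1 # Block commit on issues detected
elif [ "$status" -ne 0 ]; then
  # Any other non-zero code means the scan itself did not complete
  echo "Snyk scan did not complete (exit code $status)" >&2
  exit "$status"
fi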
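
Once the hook has written snyk_report.json, a short script can summarise it for a commit message or CI log. The sketch below assumes the report is SARIF-shaped, with findings under `runs[].results[]` and a `level` field on each result; the helper names `count_by_level` and `should_block` and the sample data are made up for illustration.

```python
from __future__ import annotations

import json
from collections import Counter
from pathlib import Path


def load_report(path: str) -> dict:
    """Read a JSON report from disk."""
    return json.loads(Path(path).read_text(encoding="utf-8"))


def count_by_level(report: dict) -> Counter:
    """Count findings per level; results without a level are counted as 'unknown'."""
    counts: Counter = Counter()
    for run in report.get("runs", []):
        for result in run.get("results", []):
            counts[result.get("level", "unknown")] += 1
    return counts


def should_block(counts: Counter, blocking_levels: tuple = ("error",)) -> bool:
    """Block only when at least one finding has a blocking level."""
    return any(counts[level] > 0 for level in blocking_levels)


# Hypothetical sample shaped like a SARIF document.
sample_report = {
    "runs": [
        {
            "results": [
                {"ruleId": "demo/sql-injection", "level": "error"},
                {"ruleId": "demo/weak-hash", "level": "warning"},
                {"ruleId": "demo/open-redirect", "level": "warning"},
            ]
        }
    ]
}

if __name__ == "__main__":
    summary = count_by_level(sample_report)
    print(dict(summary), "block commit:", should_block(summary))
```

Blocking on the highest level only is one way to keep the hook from rejecting every commit in a noisy legacy codebase while still surfacing the rest in the summary.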

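Noise reduction is typically handled through scan options and policy files, for example the --severity-threshold flag on snyk code test or path exclusions in a .snyk policy file. The YAML below is an illustrative sketch of the kind of tuning involved, not Snyk's actual configuration schema:

snyk:
  code:
    rules:
      exclude:
        - "low-severity" # Exclude low severity alerts from reports
    custom_fix_templates: true # Enable AI-powered fix suggestion templates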

Precision and Pitfalls

- Pros: High precision substantially lowers triage load.

- Cons: False positives crop up in complicated legacy code, meaning some tuning is inevitable.

Developers who embraced this tool shifted from firefighting to proactive fixing, a clear example of AI reshaping security culture.

More info at Snyk Code official page.

Google Big Sleep: Hunting the Unknown Unknowns with Autonomous AI

Zero-Day Hunting Goes Autonomous

Imagine an AI agent so cunning it explores software like a curious-but-ruthless toddler tearing apart a toy to find weaknesses. That’s Google Big Sleep: an experimental, LLM-based vulnerability research agent from Google Project Zero and Google DeepMind, designed for autonomous discovery of unknown vulnerabilities before criminals even dream them up.

Complex Architecture Under the Hood

As publicly described, Big Sleep (the successor to Project Naptime) combines:

- A large language model that reads and reasons about target code much as a human researcher would

- Tools the model can drive, such as a code browser, a sandboxed Python interpreter for generating test inputs, and a debugger

- A reporting step that turns reproduced findings into actionable vulnerability reports

Details remain sparse because the system is not publicly available, but open-source fuzzing infrastructure such as ClusterFuzz provides a glimpse of how automated bug discovery runs at scale.

# Hypothetical usage example: Big Sleep is not publicly available,
# so this command and its flags are purely illustrative.
big_sleep --target chrome --duration 72h --output findings.json

Integrating Findings into DevSecOps

Output reports feed into issue trackers like Jira or GitHub, with severity prioritisation, enabling efficient triage of unknown risks.
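
As a rough sketch of that hand-off, the Python below sorts findings by severity and turns each one into a payload with the `title`, `body`, and `labels` fields that GitHub's create-issue endpoint accepts. The findings schema (`id`, `title`, `severity`, `component`) and the function name `to_issue_payloads` are assumptions made for illustration; no real tool's output format is implied, and the HTTP call itself is left out.

```python
from __future__ import annotations

# Lower rank means higher priority; unknown severities sort last.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


def to_issue_payloads(findings: list[dict]) -> list[dict]:
    """Order findings by severity and build one issue payload per finding."""
    ordered = sorted(
        findings,
        key=lambda f: SEVERITY_RANK.get(f["severity"], len(SEVERITY_RANK)),
    )
    return [
        {
            "title": f"[{f['severity'].upper()}] {f['title']}",
            "body": f"Finding {f['id']} in component {f['component']}.",
            "labels": ["security", f"severity:{f['severity']}"],
        }
        for f in ordered
    ]


# Hypothetical findings, standing in for a parsed findings.json.
sample_findings = [
    {"id": "F-2", "title": "Verbose error page", "severity": "low", "component": "web"},
    {"id": "F-1", "title": "Heap overflow in parser", "severity": "critical", "component": "core"},
    {"id": "F-3", "title": "Missing bounds check", "severity": "high", "component": "codec"},
]

if __name__ == "__main__":
    for payload in to_issue_payloads(sample_findings):
        print(payload["title"], payload["labels"])
```

Sorting before filing means the tracker's default creation order already reflects priority, which matters when triage time is the scarce resource.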

Reality Check

- High compute demands make this impractical for smaller teams.

- Parameter tuning is challenging—too many false positives can overwhelm.

- Tooling and workflows are immature, so expect bumps in adoption.

Still, fully automated zero-day vulnerability discovery and patching feels tantalisingly close.

For background, see Google Security Blog and security news like Forbes on Android vulnerabilities.

How Do These Platforms Stack Up?

| Feature | Aikido Security | Snyk DeepCode AI | Google Big Sleep |
|---|---|---|---|
| Automation Level | High (auto fix PRs) | Medium (fix suggestions) | High (autonomous zero-day hunting) |
| Accuracy | Good (manual fallbacks needed) | High precision static analysis | Experimental, variable AI heuristics |
| Integration Difficulty | Moderate (CI/CD pipelines) | Low (hooks and IDE plugins) | High (specialised fuzzing & compute) |
| Primary Use Case | Rapid patching of known vulnerabilities | Developer-centric code quality | Unknown vulnerability discovery |
| Compute Requirements | Low to medium | Low | High |

Far from replacements, these tools are comrades-in-arms. Use Aikido to turbocharge patch cycles, Snyk DeepCode AI to embed security in developers' daily work, and Google Big Sleep to chase shadows that traditional scanners can’t see.

True Tales From the Trenches: What I Learned

Onboarding AI security tools felt like handing a sledgehammer to someone who’d only wielded a butter knife. Initially, Aikido bombarded us with false positives, threatening to drown the dev team in pointless PRs. Human oversight was non-negotiable: switching the AI off was tempting, but it would have been a fatal mistake.

Daily standups emerged, laser-focused on analysing AI insights. This prevented “alert fatigue 2.0” and fostered a team-wide appreciation for the AI’s learning curve. The eventual payoff? A fivefold reduction in remediation times and, even better, junior devs swapping dry lectures for hands-on secure coding practices inspired by AI suggestions.

Bonus: automated audit trails from PRs made governance straightforward — no more wrestling with messy manual records.

What’s Next? Emerging Trends to Watch (Or Roll Your Eyes At)

- Generative AI Writing Auto-Patches: Soon enough, patches won’t just be suggested—they’ll be stitched in automatically. (Cue a 2-day panic when it misfires.)

- Adversarial AI Bots: Because nothing says fun like bots simulating attacks against your own codebase continuously in the pipeline.

- Autonomous Closed-Loop Security Agents: The dream (or nightmare) of AI that fixes vulnerabilities faster than you can blink.

Ethical pitfalls abound here: AI bias, over-trust, and attacks targeting the AI tools themselves. Vigilant governance, transparent AI outcomes, and continuous human training will be the saving grace.

Concrete Steps to Slash Your Vulnerability Exposure

- Pilot a Single AI Tool: Pick one tool (Aikido, Snyk DeepCode AI, or a fuzzing framework) and embed it into your CI/CD pipeline.

- Define Metrics to Track: Measure mean time to remediation, fix adoption rate, and false positive trends to quantify impact.

- Train Teams to Critically Interpret AI Results: AI is a weapon, not a crystal ball—trust but verify.

- Establish Regular Review Forums: Spend time understanding AI outputs collaboratively to avoid alert fatigue.

- Expand Gradually and Integrate: Layer in complementary solutions for environment management and AI-powered operational intelligence monitoring to reduce toil and increase vigilance.
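
The three metrics named in the steps above can be computed from a simple export of findings. This is a minimal Python sketch under assumed field names; the `Finding` record and the helper functions are hypothetical, and your tracker's export will look different.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Finding:
    detected_at: datetime
    fixed_at: datetime | None = None
    fix_suggested: bool = False
    fix_accepted: bool = False
    false_positive: bool = False


def mttr_hours(findings: list[Finding]) -> float | None:
    """Mean time to remediation in hours, over findings that have been fixed."""
    durations = [
        (f.fixed_at - f.detected_at).total_seconds() / 3600
        for f in findings
        if f.fixed_at is not None
    ]
    return mean(durations) if durations else None


def fix_adoption_rate(findings: list[Finding]) -> float | None:
    """Share of suggested fixes that developers accepted."""
    suggested = [f for f in findings if f.fix_suggested]
    if not suggested:
        return None
    return sum(f.fix_accepted for f in suggested) / len(suggested)


def false_positive_rate(findings: list[Finding]) -> float | None:
    """Share of all findings that were marked as false positives."""
    if not findings:
        return None
    return sum(f.false_positive for f in findings) / len(findings)


# Hypothetical sample data.
sample = [
    Finding(datetime(2025, 1, 1), datetime(2025, 1, 2), True, True),
    Finding(datetime(2025, 1, 1), datetime(2025, 1, 3), True, True),
    Finding(datetime(2025, 1, 2), None, True, False),
    Finding(datetime(2025, 1, 2), None, False, False, True),
]

if __name__ == "__main__":
    print(mttr_hours(sample), fix_adoption_rate(sample), false_positive_rate(sample))
```

Tracking these per week rather than as a single number makes it easier to see whether a pilot is actually moving remediation time or just shifting noise around.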

Explore these complementary breakthroughs for enhanced developer experience and observability:

- Environment Management and Developer Productivity: How Bunnyshell, DX, and Appvia Wayfinder Revolutionise Developer Experience

- Data and Infrastructure Monitoring Reinvented: How Telmai, Better Stack, and Robusta Deliver AI-Powered Operational Intelligence for DevOps Teams

That’s it. If you’re still drowning in legacy scanner alerts and agonising over patching, it’s time to summon these AI warriors to your DevSecOps frontline. The era of dreadfully long remediation windows is fading fast.

Here’s hoping for fewer fires and definitely more sleep.

References

- Aikido Security. (2025). Automated Vulnerability Management. Retrieved from https://www.aikido.dev/blog/without-dependency-graph-blind-to-vulnerabilities

- Snyk. (2025). DeepCode AI for Code Security. https://snyk.io/product/code/

- Google Security Blog. (2025). Introducing Big Sleep: AI for Autonomous Vulnerability Discovery. https://security.googleblog.com

- Rapid7. (2025). Incident Response Findings Q2 2025. https://www.rapid7.com/blog/post/dr-rapid7-q2-2025-incident-response-findings/

- SecurityInfoWatch. (2025). Why Traditional Vulnerability Scoring Falls Short. https://www.securityinfowatch.com/cybersecurity/article/55316020/why-traditional-vulnerability-scoring-falls-short-in-2025

- Environment Management and Developer Productivity: How Bunnyshell, DX, and Appvia Wayfinder Revolutionise Developer Experience

- Data and Infrastructure Monitoring Reinvented: How Telmai, Better Stack, and Robusta Deliver AI-Powered Operational Intelligence for DevOps Teams

This article was inspired by real incident reflections and emerging AI trends sourced from industry reports and tool documentation.