Red Hat Consulting GitLab Breach (Crimson Collective) — A Tactical Third-Party Risk Playbook for DevOps Teams

A Breach That Changed the Rules of Engagement

What if your most trusted consulting partner suddenly became the weakest link in your entire security chain? The 2025 Red Hat Consulting GitLab breach wasn’t just another headline; it was a seismic shock to how we perceive third-party risk in DevOps. The attackers claimed 570 GB of stolen data, 28,000 repositories laid bare, and nearly 800 Customer Engagement Reports compromised. Sound like a disaster your team could survive unscathed? Think again.

This wasn’t your garden-variety leak of open-source code. Instead, it exposed intimate operational secrets: architectural diagrams, embedded credentials, infrastructure notes — secrets that no DevOps engineer wants published on the dark web. The breach ripped open the illusion that your partners’ pipelines are isolated fortresses. It’s a chilling wake-up call for every team complacent about their third-party security posture.

Let me share how this catastrophe unfolded, why it should terrify your third-party risk assumptions, and how you can learn from the scorched earth to build a fortress that actually stands.

1. Incident Overview: When Crimson Collective Pulled the Plug

October 2025 delivered one of the most alarming third-party security events in recent memory. Crimson Collective (also known as Eye of Providence), a notorious cybercrime group, breached Red Hat’s self-managed GitLab instance used by the Consulting team. Their boast? An astounding 570 GB of data including 28,000 repositories and 800 Customer Engagement Reports (CERs) packed with sensitive client details.

These CERs are far from mundane documents; they’re digital blueprints revealing client network topologies and deployment secrets, often littered with credentials silently lying in wait. Although Red Hat insisted no direct customer product or personal data was affected, the operational fallout could echo for years.

I vividly recall the moment I first read about the breach: it was a gut punch. I’d seen similar leaks, but never one of this scale and subtlety. The attackers even leaked screenshots to flaunt their haul, a brazen move that amplified the chaos across DevOps security circles.

2. How Did This Happen? Dissecting the Attack Vector

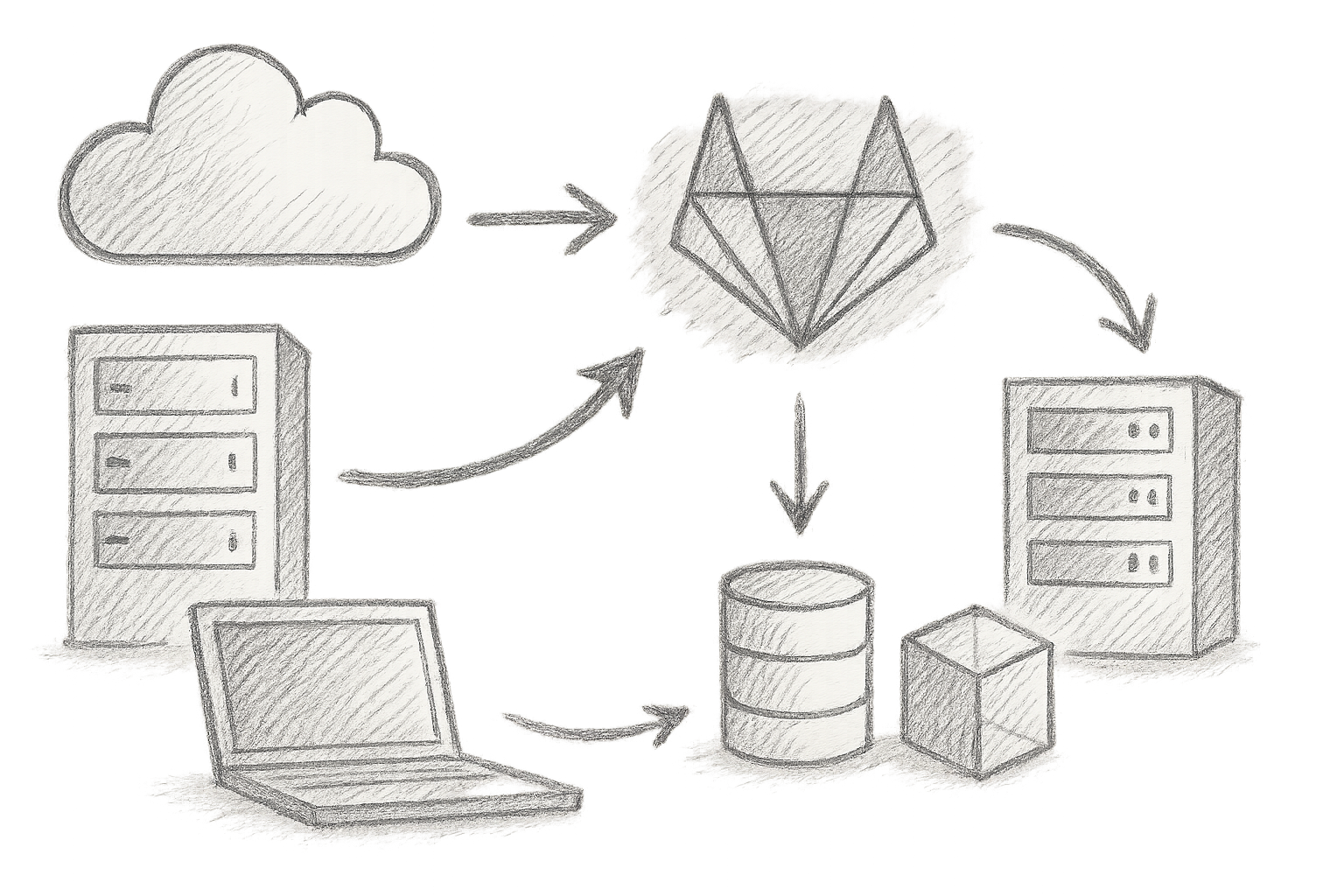

The Perils of Self-Managed GitLab

Running your own GitLab server can feel like wielding a double-edged sword — ultimate control, but complete accountability. Red Hat’s Consulting platform was self-hosted, meaning all security responsibilities rested on their shoulders.

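The exact initial access vector has not been publicly detailed. Compromises of self-managed GitLab instances like this one usually trace back to a cocktail of credential compromises and misconfigurations, including: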

- Leaked or stolen SSH keys and API tokens lurking unattended

- Lack of a rigorous least privilege access model across users and runners

- Absence of mandatory multi-factor authentication (MFA) for all critical access points

- Potentially exposed public-facing endpoints or misconfigured runners

Wait... Tokens and Secrets Within Repositories?

Here’s the “wait, what?” moment: how many DevOps teams realise their repositories still contain embedded secrets and tokens, just waiting to be found by a threat actor? This breach shines a light on how easily these can be weaponised once inside. Recent security research highlights that exposed credentials in code repositories remain one of the most overlooked attack vectors, often enabling lateral movement and privilege escalation.
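To see how easily embedded secrets surface once someone holds a checkout, here is a minimal sketch of a pattern scan over a working tree. The function name `scan_for_secrets` and its three patterns are assumptions chosen for illustration: GitLab's `glpat-` token prefix, AWS access key IDs, and PEM private-key headers. It relies on GNU grep and is no substitute for a dedicated scanner.

```shell
#!/usr/bin/env bash
# Minimal secret-pattern scan of a checked-out repository. This is a sketch,
# not a replacement for GitLab Secret Detection, gitleaks or TruffleHog, and
# the three patterns are illustrative assumptions, not a complete rule set.

# scan_for_secrets [DIR]
# Prints "file:line" for each match under DIR (default: current directory),
# without echoing the secret itself. Returns 1 if anything matched, else 0.
scan_for_secrets() {
  local dir=${1:-.} hits
  # -r recurse, -n line numbers, -I skip binary files, -E extended regexes
  hits=$(grep -rnIE --exclude-dir=.git \
      -e 'glpat-[0-9A-Za-z_-]{20,}' \
      -e 'AKIA[0-9A-Z]{16}' \
      -e '-----BEGIN [A-Z ]*PRIVATE KEY-----' \
      -- "$dir" | cut -d: -f1,2)   # keep only file:line, drop the match
  if [ -n "$hits" ]; then
    printf '%s\n' "$hits"
    return 1
  fi
  return 0
}

# Example usage:
#   scan_for_secrets ./consulting-repo || echo "rotate anything listed above"
```

The scan only covers the current working tree. A secret deleted in a later commit still lives in history, so `git log -p` or a history-aware scanner is needed for that part.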

Lateral Movement Like a Pro

Once inside, the attackers reportedly did far more than grab a few files. By their own account, they combed through everything (repositories, service accounts, tokens, pipeline configurations) and used every secret they found to extend their reach deeper into the environment. The stolen CERs effectively handed them a roadmap to client systems, possibly opening backdoors well beyond Red Hat’s immediate network.

Industry Experts Weigh In

- Red Hat confirmed no personal data exposure — a relief but somewhat hollow for DevOps teams aware that operational secrets are often more critical.

- Security researchers highlight how credentials embedded in code repositories remain dangerously overlooked attack vectors (Sirius Open Source - GitLab Problems and Risks).

- The breach is a brutal reminder that code theft is petty theft, while information leakage is a ticking time bomb (for breach-pattern data, see the Verizon DBIR 2025).

3. The Frontline Defence: Rapid DevOps Playbook for Third-Party Credential Exposure

If you’ve ever been jolted awake at 3 AM by alerts signalling compromised credentials, you know the fight for control is primal and urgent. Having battled these fires myself, I’m sharing a war-tested playbook — ready to deploy before chaos reigns.

Step 1: Sniff Out and Revoke Compromised Credentials — Fast

Start by enumerating all tokens, SSH keys, and API secrets associated with third-party GitLab instances — automation is your best friend here.

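Here’s a Bash script that lists and revokes active GitLab personal access tokens via the admin API. It needs `curl` and `jq` and reads the admin token from the environment. It only prints what it would revoke until you set `DRY_RUN=0`:

```shell
#!/usr/bin/env bash
# List active GitLab personal access tokens and revoke them via the admin API.
set -euo pipefail

GITLAB_URL="${GITLAB_URL:-https://your.gitlab.instance}"
ADMIN_TOKEN="${ADMIN_TOKEN:?export ADMIN_TOKEN (an admin personal access token)}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print; 0 = really revoke

# ID of the token running this script, so it never revokes itself mid-run
self_id=$(curl --silent --fail --header "PRIVATE-TOKEN: $ADMIN_TOKEN" \
  "$GITLAB_URL/api/v4/personal_access_tokens/self" | jq -r '.id')

# Collect IDs of all active tokens, 100 per page, until a page comes back empty
tokens=""
page=1
while :; do
  batch=$(curl --silent --fail --header "PRIVATE-TOKEN: $ADMIN_TOKEN" \
    "$GITLAB_URL/api/v4/personal_access_tokens?state=active&per_page=100&page=$page" \
    | jq -r '.[].id')
  [ -z "$batch" ] && break
  tokens="$tokens $batch"
  page=$((page + 1))
done

if [ -z "$tokens" ]; then
  echo "No active tokens found."
  exit 0
fi

for token_id in $tokens; do
  [ "$token_id" = "$self_id" ] && continue   # skip the admin token in use
  if [ "$DRY_RUN" != "0" ]; then
    echo "Would revoke token $token_id"
    continue
  fi
  code=$(curl --silent --output /dev/null --write-out "%{http_code}" \
    --request DELETE --header "PRIVATE-TOKEN: $ADMIN_TOKEN" \
    "$GITLAB_URL/api/v4/personal_access_tokens/$token_id")
  if [ "$code" = "204" ]; then
    echo "Revoked token $token_id"
  else
    echo "Failed to revoke token $token_id (HTTP $code)"
  fi
done
```

Heads-up: always run this against a staging instance first, and review the dry-run list before revoking anything in production. Because of `curl --fail` and `set -e`, the script stops on authentication or permission errors instead of silently reporting zero tokens. It covers personal access tokens only. SSH keys, deploy keys, and project or group access tokens have their own API endpoints and need the same sweep. Admin API access is sensitive, so keep the token out of shell history and never hard-code it.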

Step 2: Hunt Suspicious Activity in Logs Like a Bloodhound

No breach playbook is complete without forensic logging. Collect authentication events and pipeline telemetry with a framework such as OpenTelemetry (OpenTelemetry Project), then correlate them in your logging backend; that is how attacker footprints surface.

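For a quick first pass, pull the last week of GitLab Runner logs and keep only lines that look like authentication or job failures. The `[sz]` matches both spellings, since GitLab logs use American English:

```shell
journalctl -u gitlab-runner.service --since "7 days ago" \
  | grep -Ei "unauthori[sz]ed|forbidden|failed|error" > suspicious_activities.log
```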

You’ll be stunned at what "normal" looks like once you crank up alerting.
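To go beyond grepping the runner journal, the sketch below counts 401 and 403 API responses per client IP, which is a cheap first signal of token spraying. It assumes an Omnibus install that logs one JSON object per line to `/var/log/gitlab/gitlab-rails/api_json.log`, with `status` and `remote_ip` fields. Check the path and field names for your GitLab version. The function name and threshold are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: count 401/403 API responses per client IP in GitLab's JSON API log.
# Assumes one JSON object per line containing "status" and "remote_ip" fields
# (default Omnibus path: /var/log/gitlab/gitlab-rails/api_json.log).
# Uses text matching rather than a JSON parser, so it is a triage aid only.

# auth_failures_by_ip THRESHOLD [LOGFILE]
# Prints "COUNT IP" for every IP with at least THRESHOLD 401/403 responses,
# highest count first. Reads standard input when LOGFILE is omitted.
auth_failures_by_ip() {
  local threshold=${1:?threshold required} log=${2:--}
  grep -E '"status":(401|403)[,}]' "$log" \
    | sed -nE 's/.*"remote_ip":"([^"]+)".*/\1/p' \
    | sort | uniq -c \
    | awk -v t="$threshold" '$1 >= t { print $1, $2 }' \
    | sort -k1,1nr -k2,2
}

# Example usage (run as a user who can read the log):
#   auth_failures_by_ip 20 /var/log/gitlab/gitlab-rails/api_json.log
```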

Step 3: Lock Down Access — Least Privilege is Not Optional

- Audit every user and runner permission: strip anything not absolutely mandatory.

- Segregate consulting activities from core infrastructure, because assuming a partner’s environment is safe is usually wishful thinking.

- Disable all legacy SSH keys and tokens that haven’t seen action in months.
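Finding tokens that haven't seen action in months is easy to script once you have each token's last-use timestamp. The sketch below assumes input lines of the form `ID LAST_USED_AT`. The timestamp is ISO 8601, as GitLab's personal access token API returns it, and `null` means the token was never used. The function name and cutoff date are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: flag tokens whose last use predates a cutoff date.
# Input: one token per line as "ID LAST_USED_AT", where LAST_USED_AT is an
# ISO 8601 UTC timestamp (e.g. 2025-03-14T09:12:00.000Z) or "null" if the
# token was never used.

# stale_tokens CUTOFF_DATE   (CUTOFF_DATE as YYYY-MM-DD)
# Prints the IDs of never-used tokens and tokens last used before the cutoff.
# Dates in YYYY-MM-DD form sort chronologically as plain strings, so a string
# comparison on the first 10 characters is enough.
stale_tokens() {
  local cutoff=${1:?cutoff date required (YYYY-MM-DD)}
  awk -v c="$cutoff" '
    NF < 2                { next }             # skip malformed lines
    $2 == "null"          { print $1; next }   # never used
    substr($2, 1, 10) < c { print $1 }         # last used before the cutoff
  '
}

# Example usage (first page of up to 100 active tokens; needs curl and jq):
#   curl --silent --header "PRIVATE-TOKEN: $ADMIN_TOKEN" \
#     "$GITLAB_URL/api/v4/personal_access_tokens?state=active&per_page=100" \
#     | jq -r '.[] | "\(.id) \(.last_used_at)"' | stale_tokens 2025-07-01
```

The IDs it prints are candidates for the revocation script from Step 1, after a human has checked them.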

Step 4: Patch Rapidly but Carefully

After a breach like this, patching is paramount. Prioritise critical GitLab security releases, focusing on:

- Runner API access controls

- Webhook permission hardening

- Multi-factor authentication enforcement

For a deeper dive, see the GitLab 18.5.1/18.4.3 security patch breakdown listed under Internal Cross-Links.
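Before trusting that a patch landed, check the running version rather than the upgrade ticket. The sketch below compares versions with GNU `sort -V`, and the `version_at_least` name is hypothetical. The 18.5.1 figure in the usage comment echoes the cross-linked article's title; confirm real minimums against GitLab's own security release notes.

```shell
#!/usr/bin/env bash
# Sketch: check whether a GitLab version meets a minimum patched release,
# using GNU sort's version ordering (sort -V).

# version_at_least CURRENT MINIMUM
# Returns 0 when CURRENT >= MINIMUM. Edition suffixes such as "-ee" are
# stripped first, so "18.5.1-ee" compares as "18.5.1".
version_at_least() {
  local current=${1%%-*} minimum=${2%%-*}
  [ "$(printf '%s\n%s\n' "$minimum" "$current" | sort -V | head -n 1)" = "$minimum" ]
}

# Example usage (needs curl and jq). Compare against the minimum for the
# minor line you actually run, taken from GitLab's security release notes:
#   current=$(curl --silent --header "PRIVATE-TOKEN: $ADMIN_TOKEN" \
#     "$GITLAB_URL/api/v4/version" | jq -r .version)
#   version_at_least "$current" 18.5.1 || echo "GitLab $current needs patching"
```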

4. Post-Breach Hardening: What We Did Differently Going Forward

If you assume default GitLab security settings are enough, you’re rolling the dice on a nasty outcome. Here’s what the battle-hardened teams did next — and you should too:

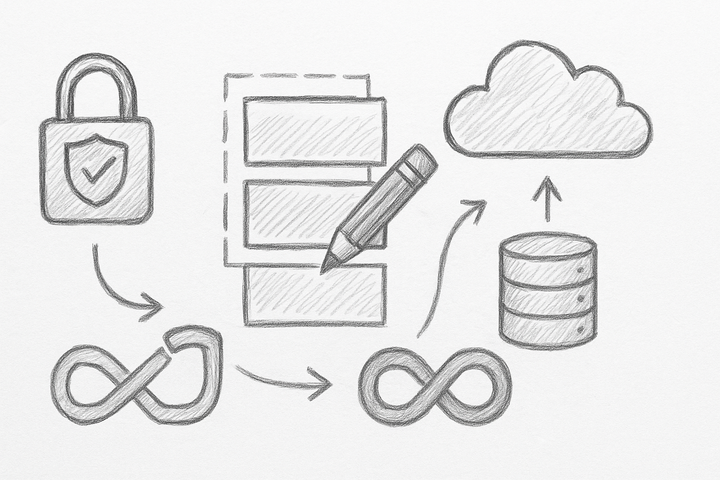

Demand MFA and Ephemeral Credentials

- MFA isn’t a “nice to have” — mandate it for everyone with privileged access.

- Swap long-lived static tokens for ephemeral credentials with short TTLs, and revoke old tokens as soon as they are no longer needed.
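Short TTLs are easiest to enforce at creation time. The sketch below mints a project access token that expires in days rather than months, using GitLab's `POST /projects/:id/access_tokens` endpoint. All of the following are example choices: `GITLAB_URL`, `ADMIN_TOKEN`, the function names, the 30-day ceiling, the `read_repository` scope, and access level 20 (Reporter). The date arithmetic assumes GNU `date`.

```shell
#!/usr/bin/env bash
# Sketch: mint a project access token that expires in days, not months.
# Assumptions: GNU date and curl; GITLAB_URL and ADMIN_TOKEN are placeholders
# for your instance URL and a token allowed to create project access tokens.
# The 30-day ceiling, scope and access level below are example choices.

# short_ttl_expiry DAYS [MAX_DAYS]
# Prints the UTC date DAYS from today (YYYY-MM-DD) and refuses TTLs outside
# 1..MAX_DAYS (default 30).
short_ttl_expiry() {
  local days=${1:?days required} max=${2:-30}
  case $days in *[!0-9]*) echo "DAYS must be a whole number" >&2; return 2 ;; esac
  if [ "$days" -lt 1 ] || [ "$days" -gt "$max" ]; then
    echo "TTL must be between 1 and $max days" >&2
    return 1
  fi
  date -u -d "+$days days" +%Y-%m-%d
}

# create_short_lived_project_token PROJECT_ID NAME DAYS
# Calls POST /projects/:id/access_tokens with a read-only scope and
# Reporter access (level 20); prints GitLab's JSON response.
create_short_lived_project_token() {
  local project=$1 name=$2 expires
  expires=$(short_ttl_expiry "$3") || return 1
  curl --silent --fail --request POST \
    --header "PRIVATE-TOKEN: $ADMIN_TOKEN" \
    --data-urlencode "name=$name" \
    --data "scopes[]=read_repository" \
    --data "access_level=20" \
    --data "expires_at=$expires" \
    "$GITLAB_URL/api/v4/projects/$project/access_tokens"
}

# Example usage (hypothetical project 42, 7-day consulting token):
#   create_short_lived_project_token 42 consulting-readonly 7
```

Inside CI jobs you can often avoid stored tokens entirely by using `CI_JOB_TOKEN` or OIDC ID tokens, which GitLab issues per job.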

Commit to Signed Commits & Image Signing

- Adopt tools like Cosign for cryptographic container image signatures.

- Enforce signed Git commits to stamp out unauthorised code injections.

- Use attestations to track build provenance — provenance paranoia pays off.
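Enforcing signed commits starts with being able to list the ones that fail. The sketch reads `git log --format='%H %G?'` output, where `%G?` is Git's signature-status placeholder. It treats anything other than `G` (good, valid signature) as a violation. That strict policy and the function name are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: list commits that lack a good, valid signature.
# Input: lines of `git log --format='%H %G?'`. Git's %G? prints G for a good,
# valid signature and B, U, X, Y, R, E or N otherwise. Treating everything
# except G as a failure is a deliberately strict policy choice.

# unsigned_commits
# Reads "SHA STATUS" lines on stdin, prints each failing SHA, and returns 1
# if at least one commit failed.
unsigned_commits() {
  awk 'NF && $2 != "G" { print $1; bad = 1 } END { exit bad }'
}

# Example usage in a CI job (verification needs the signers' public keys in
# the job's GPG keyring or SSH allowed-signers file):
#   git log --format='%H %G?' origin/main~20..origin/main | unsigned_commits
```

For container images, `cosign verify --key cosign.pub <image>` plays the equivalent gating role before deployment.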

Lock Down GitLab Runner API Access

- Disable runner features you don’t need, such as privileged mode on Docker executors.

- Restrict runner registration to trusted nodes only.

- Maintain comprehensive audit logs of runner API activities for detective work later.
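Privileged mode on Docker-executor runners is a concrete "unnecessary feature" worth hunting, because it gives jobs broad access to the host. The sketch scans `config.toml` line by line for `privileged = true` and reports the enclosing `[[runners]]` name. It assumes the default Linux path `/etc/gitlab-runner/config.toml` and is not a full TOML parser. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: flag GitLab Runner entries that run Docker jobs in privileged mode.
# Line-based check of config.toml, not a full TOML parser: it tracks the
# most recent [[runners]] block, remembers its first `name = "..."` line, and
# reports it when a `privileged = true` line appears inside that block.

# privileged_runners [CONFIG]
# Prints one runner name per privileged entry; returns 1 if any were found.
privileged_runners() {
  local cfg=${1:-/etc/gitlab-runner/config.toml}
  awk '
    /^[[:space:]]*\[\[runners\]\]/ { name = "(unnamed)"; in_runner = 1; next }
    in_runner && name == "(unnamed)" && /^[[:space:]]*name[[:space:]]*=/ {
      v = $0
      sub(/^[^=]*=[[:space:]]*"/, "", v)   # drop everything up to the opening quote
      sub(/".*$/, "", v)                   # drop the closing quote and the rest
      name = v
    }
    in_runner && /^[[:space:]]*privileged[[:space:]]*=[[:space:]]*true/ {
      print name; found = 1
    }
    END { exit (found ? 1 : 0) }
  ' "$cfg"
}

# Example usage (config.toml may be readable only by root):
#   privileged_runners || echo "review the runners listed above"
```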

Automate Secrets Scanning in Pipelines

Integrate secret detection tools such as GitLab’s built-in secret detection or Trivy to catch accidental secret commits before disaster strikes.
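For a fast local gate ahead of the pipeline scanners, the sketch below rejects a diff whose added lines match a few credential patterns. It reads a unified diff on stdin and ignores removed lines and `+++` file headers. The patterns (GitLab `glpat-` tokens, AWS access key IDs, PEM private keys) are illustrative, and the function name is hypothetical. It complements GitLab Secret Detection or Trivy rather than replacing them.

```shell
#!/usr/bin/env bash
# Sketch: fail when a unified diff adds lines that look like credentials.
# Only added lines are checked ("+" lines, excluding "+++ b/file" headers),
# so secrets already in history are out of scope. The three patterns are
# illustrative: GitLab glpat- tokens, AWS access key IDs, PEM private keys.

# diff_adds_secret < DIFF
# Returns 1 (and prints a count to stderr) when any added line matches.
diff_adds_secret() {
  local added count
  added=$(grep -E '^\+' | grep -vE '^\+\+\+ ')
  count=$(printf '%s\n' "$added" | grep -cE \
    -e 'glpat-[0-9A-Za-z_-]{20,}' \
    -e 'AKIA[0-9A-Z]{16}' \
    -e '-----BEGIN [A-Z ]*PRIVATE KEY-----')
  if [ "$count" -gt 0 ]; then
    echo "blocked: $count added line(s) match secret patterns" >&2
    return 1
  fi
  return 0
}

# Example usage:
#   pre-commit hook:  git diff --cached -U0 | diff_adds_secret || exit 1
#   merge request CI: git diff "$CI_MERGE_REQUEST_DIFF_BASE_SHA" HEAD | diff_adds_secret
#   broader scan:     trivy fs --scanners secret --exit-code 1 .
```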

5. The Never-Ending Battle: Validation and Continuous Improvement

If you think patching and hardening ends the story — congratulations, you’re the unwitting star of the next “wait, what?” security incident.

Continuous Monitoring with Anomaly Detection

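Deploy lightweight endpoint monitoring tools such as Sysmon (Windows, with a Linux port) and Osquery (Linux, macOS and Windows) for detailed visibility. For example, list every running process whose name contains "gitlab":

```shell
osqueryi --json "SELECT * FROM processes WHERE name LIKE '%gitlab%';"
```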

Watch for suspicious process chains or unexplained network traffic — the telltale signs of an ongoing intrusion.
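Unexplained network traffic is easiest to spot against a known-good baseline. The sketch diffs two files of one-record-per-line snapshots. One source is listening addresses captured on Linux with `ss -Htln | awk '{print $4}'`; an equivalent query against osquery's `listening_ports` table also works. The function name and file names are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: report records that appear in a current snapshot but not in a
# known-good baseline. Records are opaque lines, e.g. listening addresses
# captured on Linux with:  ss -Htln | awk '{print $4}' | sort -u

# new_since_baseline BASELINE_FILE CURRENT_FILE
# Prints each new record once; returns 1 if there were any.
new_since_baseline() {
  local new
  # -F fixed strings, -x whole-line match, -v invert, -f patterns from file
  new=$(grep -vxF -f "$1" "$2" | sort -u)
  if [ -n "$new" ]; then
    printf '%s\n' "$new"
    return 1
  fi
  return 0
}

# Example usage:
#   ss -Htln | awk '{print $4}' | sort -u > baseline.txt   # once, on a clean host
#   ss -Htln | awk '{print $4}' | sort -u > current.txt    # on a schedule
#   new_since_baseline baseline.txt current.txt || echo "new listener(s) above"
```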

Integrate Incident Response into Your SIEM

Feed your logs into a Security Information and Event Management solution for automated correlation, alerting, and rapid incident response.

Red Team Simulation Drills

Run realistic third-party breach exercises to uncover hidden gaps and sharpen your team’s incident handling skills. Remember: nobody enjoys red team exercises, but they’re adrenaline shots your security posture desperately needs.

Maintain Clear Incident Playbooks

Chaos is inevitable — clear, well-documented playbooks for supply chain and third-party breach scenarios ensure you respond swiftly and decisively.

6. The Hard Truth: Embracing Zero Trust

The Red Hat Consulting breach delivered a painful but invaluable lesson: traditional perimeter defences crack like cheap pottery once your trusted partners get a foot in the door.

Zero Trust isn’t a buzzword here; it’s your lifeline. Here’s the condensed reality check:

- No implicit trust — especially for third-party or consulting GitLab environments.

- Continuous verification using runtime attestation and supply chain integrity checks (SLSA Framework).

- Automated credential lifecycle management with ephemeral secrets and forced rotation.

- Real-time monitoring for configuration drift and access anomalies.
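Drift monitoring can start far simpler than a dedicated product. Record checksums of the files that define your GitLab and runner configuration, then re-check them on a schedule. This sketch uses GNU coreutils `sha256sum`. The function names and the `/srv/baselines` path are hypothetical, and the config paths are typical Omnibus and runner defaults, not a complete list. Run from cron or a systemd timer, it is periodic rather than truly real-time.

```shell
#!/usr/bin/env bash
# Sketch: checksum-based configuration drift detection (GNU coreutils).
# Keep the baseline file somewhere an attacker on the host cannot rewrite it,
# e.g. a read-only share or your configuration-management repository.

# record_baseline BASELINE_FILE FILE...
# Records SHA-256 checksums of FILE... (use absolute paths).
record_baseline() {
  local out=$1
  shift
  sha256sum -- "$@" > "$out"
}

# check_drift BASELINE_FILE
# Prints a FAILED line for each changed or unreadable file and returns
# non-zero if anything drifted; prints nothing and returns 0 otherwise.
check_drift() {
  sha256sum --quiet --check -- "$1"
}

# Example usage (hypothetical baseline location):
#   record_baseline /srv/baselines/gitlab.sha256 \
#     /etc/gitlab/gitlab.rb /etc/gitlab-runner/config.toml
#   check_drift /srv/baselines/gitlab.sha256 || echo "configuration drift detected"
```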

Wake up: any complacency here is handing adversaries your keys.

7. Looking Ahead: Innovations and Emerging Industry Trends

Stay ahead of tomorrow’s threats by embracing:

- SLSA (Supply-chain Levels for Software Artifacts) and in-toto frameworks to enforce end-to-end supply chain security.

- AI-powered credential management and secret detection tools that outpace human error.

- Community-driven real-time vulnerability sharing platforms accelerating collective response.

- Preparing for quantum-safe cryptography — the future-proof armour DevOps pipelines will soon need.

- Awareness of critical third-party surfaces, such as ingress controllers, highlighted by CVE-2025-59303 (a secret-exposure vulnerability in the HAProxy Kubernetes Ingress Controller).

8. Concrete Next Steps for DevOps Teams: Your Battle Plan

Immediate Actions

- Isolate and revoke all suspected compromised credentials using automated scripts.

- Conduct a ruthless audit enforcing least privilege on GitLab users and runners.

- Make MFA and ephemeral secrets mandatory across all sensitive access points.

- Patch and fortify CI/CD infrastructure focusing on runner API and webhook security.

- Build secrets scanning and continuous monitoring directly into pipelines.

Metrics to Measure Success

- Credential health index (active vs expired/revoked tokens)

- Audit coverage and alerting precision rates

- Incident response efficiency: mean time to detect (MTTD) and mean time to remediate (MTTR)
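MTTD and MTTR are simple averages once incident timestamps are recorded consistently. The sketch assumes a hypothetical record format of one incident per line, `ID OCCURRED DETECTED REMEDIATED`, in Unix epoch seconds. It defines MTTD as mean(detected - occurred) and MTTR as mean(remediated - detected). Some teams measure MTTR from occurrence instead, so publish your definition alongside the number.

```shell
#!/usr/bin/env bash
# Sketch: compute MTTD and MTTR (in minutes) from incident records.
# Hypothetical input format, one incident per line:
#   ID OCCURRED_EPOCH DETECTED_EPOCH REMEDIATED_EPOCH
# MTTD = mean(detected - occurred); MTTR = mean(remediated - detected).

# incident_metrics < RECORDS
incident_metrics() {
  awk '
    NF == 4 { d += $3 - $2; r += $4 - $3; n++ }   # accumulate per-incident deltas
    END {
      if (n == 0) { print "no incidents"; exit 1 }
      printf "incidents=%d MTTD_min=%.1f MTTR_min=%.1f\n", n, d / n / 60, r / n / 60
    }'
}

# Example usage:
#   incident_metrics < incidents.txt
```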

Recommended Tools Arsenal

- Open-source: Cosign, Trivy, Osquery, Sysmon, Ansible for automation.

- Commercial: GitLab Ultimate security features, PagerDuty AIOps, Vanta for compliance.

The Cultural Shakedown

Security isn't a tick-box exercise — it’s a relentless, empathetic daily commitment. Involve developers, operations, and leadership in the narrative, building resilience as a shared value. If your team treats security like a bad morning cup of tea, you’ve already lost.

Final Thoughts: A Playbook Worth Its Weight in Gold

I’ve been through more security breaches than I can count — and yet this one still stung. The Red Hat Consulting GitLab breach is a brutal, unvarnished truth: third-party risks are the hidden landmines beneath your DevOps feet. Trust no environment by default. Treat every third-party pipeline as a potential threat vector. Keep your defences razor sharp, your eyes wide open, and your rollback plans ready.

Bookmark this playbook like your sanity depends on it — because when the inevitable breach hits your pipeline, you’ll want to fight back fast, smart, and hard.

References

- Red Hat GitLab breach: what customers should know — FindArticles (Oct 2025)

- Red Hat confirms major data breach after hackers claim mega haul — MSN (Oct 2025)

- GitLab Problems and Risks — Sirius Open Source (2025)

- Cosign GitHub Repository

- Verizon Data Breach Investigations Report 2025

- OpenTelemetry Project

- SLSA Framework

- Osquery Project

Internal Cross-Links

- Locking Down GitLab 18.5.1/18.4.3 Security Patches: Mastering Runner API Access Controls and Rock-Solid Upgrade Protocols

- CVE-2025-59303 in HAProxy Kubernetes Ingress Controller — Secret Exposure and How to Lock Down

Disclaimer: This article is based on publicly available information as of late 2025 and my personal experience navigating production incidents and DevOps security battles.