Revolutionising Disaster Recovery: Harnessing Automated Testing Frameworks for Unmatched Resilience

At 2 AM on a Tuesday, my phone erupted in a symphony of alerts. A critical database that our team had spent months fortifying was down. Panic surged through me as I recalled my previous experiences with disaster recovery (DR)—all the moments I wished I had better tested our plans. In the frantic hours that followed, I realised there was an undeniable truth: without robust, automated testing frameworks, our resilience would always hang by a thread.

The Imperative of Disaster Recovery in Modern Operations

In today's fast-paced digital landscape, the cost of downtime can be catastrophic. I’ve witnessed incidents where businesses lost thousands in revenue, as well as customer trust, because their disaster recovery strategies failed. Common pitfalls include reliance on outdated DR plans and a lack of routine testing. After a significant incident, one colleague discovered that their DR plan hadn't been updated in over a year. They had no idea that their backups were corrupt until it was too late. To prevent such incidents, understanding effective database load balancing strategies in cloud environments can provide crucial insights into managing resources during failures.

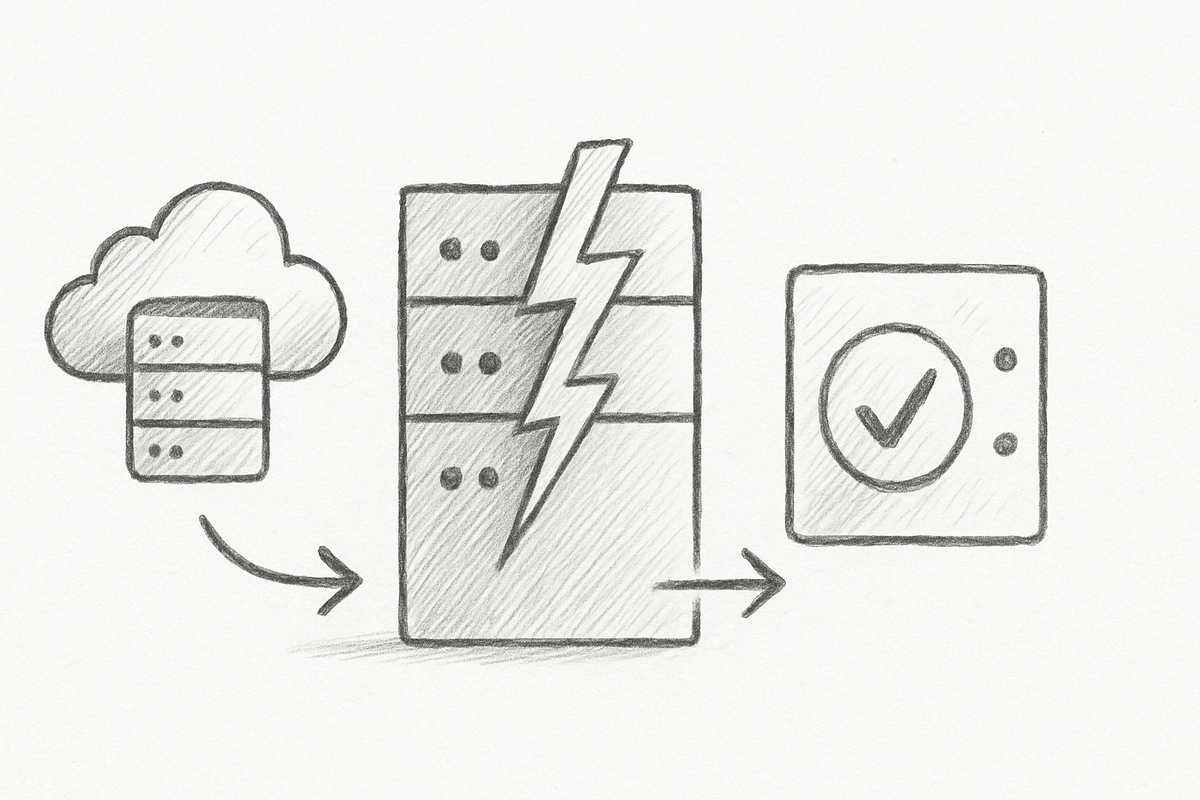

Understanding Automated Testing Frameworks

Automated testing frameworks, which span everything from unit tests to system integration tests, are vital for continuously validating DR procedures. Their primary benefits include:

- Immediate validation of DR processes

- Continuous performance testing under various conditions

- Enabling teams to simulate real failure scenarios without impacting live environments

For example, using a chaos engineering framework, I could intentionally introduce failures into the system to verify that our recovery processes were effective. To enhance your understanding of optimising security while utilising these methods, read about optimising container security with Dragonfly v2.3.0.
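
The failure-injection idea can be illustrated with a toy model. This is a minimal sketch, not how Gremlin or Chaos Monkey work internally: `Node`, `Cluster`, `inject_failure`, and `run_experiment` are hypothetical names invented for this example, and the "failure" is just a flag flipped in memory.

```python
import random


class Node:
    """A toy stand-in for a database node (hypothetical, for illustration)."""

    def __init__(self, name):
        self.name = name
        self.healthy = True

    def query(self):
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        return f"ok from {self.name}"


class Cluster:
    """Routes each query to the first healthy node, in order."""

    def __init__(self, nodes):
        self.nodes = nodes

    def query(self):
        for node in self.nodes:
            try:
                return node.query()
            except ConnectionError:
                continue  # try the next node
        raise ConnectionError("no healthy nodes left")


def inject_failure(cluster, rng):
    """Chaos step: mark one randomly chosen node as failed and return it."""
    victim = rng.choice(cluster.nodes)
    victim.healthy = False
    return victim


def run_experiment(seed=0):
    """Fail one node, then report whether the cluster still answers queries."""
    rng = random.Random(seed)
    cluster = Cluster([Node("primary"), Node("replica")])
    victim = inject_failure(cluster, rng)
    try:
        cluster.query()
        survived = True
    except ConnectionError:
        survived = False
    return victim.name, survived
```

The experiment passes only if the cluster keeps answering after a node is lost. A real chaos test asserts the same kind of property against actual infrastructure.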

Identifying Your Disaster Recovery Needs

Determining the specific needs of your organisation is critical. Here’s how I approached it:

- Assess Data Dependencies: I reviewed our applications and prioritised them based on criticality.

- Set Recovery Objectives: Clearly defining our Recovery Time Objective (RTO) and Recovery Point Objective (RPO) helped set our DR plan's success criteria. RTO is how fast we must restore the system, and RPO is the acceptable amount of data loss measured in time. These targets are essential for prioritising resources and planning.

- Establish Metrics for Testing: Metrics such as the time taken to restore service and data accuracy post-recovery became our benchmarks.
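
To make the RTO and RPO definitions above concrete, here is a small sketch of how a single drill could be scored against them. The function name, the field names, and the timestamps in the usage note are assumptions made for illustration; they do not come from a specific tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DrillResult:
    recovery_time: timedelta     # outage start -> service restored
    data_loss_window: timedelta  # last recoverable data -> outage start
    rto_met: bool
    rpo_met: bool


def evaluate_drill(outage_start, service_restored, last_recoverable_point,
                   rto, rpo):
    """Compare one drill's measured recovery against the RTO and RPO targets."""
    recovery_time = service_restored - outage_start
    data_loss_window = outage_start - last_recoverable_point
    return DrillResult(
        recovery_time=recovery_time,
        data_loss_window=data_loss_window,
        rto_met=recovery_time <= rto,
        rpo_met=data_loss_window <= rpo,
    )


# Hypothetical drill: outage at 02:00, restored at 02:25,
# last recoverable data written at 01:50.
result = evaluate_drill(
    outage_start=datetime(2024, 1, 2, 2, 0),
    service_restored=datetime(2024, 1, 2, 2, 25),
    last_recoverable_point=datetime(2024, 1, 2, 1, 50),
    rto=timedelta(minutes=30),
    rpo=timedelta(minutes=5),
)
```

In this made-up drill the 25-minute recovery meets the 30-minute RTO, but the 10 minutes of lost data miss the 5-minute RPO, so the drill should count as a failure overall.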

Integrating Automated Testing into Your Disaster Recovery Plan

Implementing automated testing can feel overwhelming. Here’s the step-by-step guide I followed:

Selecting the Right Tools

Choose tools that fit your infrastructure. I opted for:

- Chaos Engineering Tools such as Gremlin or Chaos Monkey to automate failure injections.

- CI/CD Pipelines, into which I integrated test scripts so that DR procedures were triggered automatically. For a deeper look at integrating these tools, check out enhancing security posture with automated compliance in CI/CD.

Scripting Automated Recovery Tests

Using a tool like Terraform, I created scripts simulating a complete environment failover. Here’s a simplified AWS example:

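```hcl
# Standby instance used for the failover test.
resource "aws_instance" "failover" {
  ami           = "ami-123456" # placeholder AMI ID; replace with a real image
  instance_type = "t2.micro"

  lifecycle {
    # Bring up the replacement before the old instance is destroyed.
    create_before_destroy = true
  }
}

# Public IP of the standby instance, for pointing traffic at it during the test.
output "failover_ip" {
  value = aws_instance.failover.public_ip
}
```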

We tested these scripts regularly in a staging environment to minimise risk.
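
Provisioning a standby instance is only half of a failover test; the other half is confirming that it actually serves traffic, and how quickly. The sketch below polls a health probe and returns the time to recovery. The helper names and the idea of probing over HTTP are assumptions for illustration, and the clock and sleep functions are injectable so the logic can be checked without waiting.

```python
import time
import urllib.request


def wait_until_healthy(probe, timeout_s, interval_s=1.0,
                       clock=time.monotonic, sleep=time.sleep):
    """Poll probe() until it returns True.

    Returns the elapsed seconds on success, or None if timeout_s passes first.
    """
    start = clock()
    while True:
        if probe():
            return clock() - start
        if clock() - start >= timeout_s:
            return None
        sleep(interval_s)


def http_probe(url):
    """Build a probe that is True when url answers with HTTP 200.

    The URL is whatever endpoint the standby exposes (hypothetical here).
    """
    def probe():
        try:
            with urllib.request.urlopen(url, timeout=2) as response:
                return response.status == 200
        except OSError:  # covers URLError, HTTPError, and socket timeouts
            return False
    return probe
```

A staging run might call `wait_until_healthy(http_probe(url), timeout_s=1800)`, where `url` is built from the `failover_ip` output, and treat a `None` result as a missed recovery target.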

Scheduling Regular Tests

I scheduled the tests with cron jobs so that our recovery mechanisms were exercised quarterly. The result of each test was posted automatically to our Slack channel, giving the whole team visibility.
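
As a rough sketch of what such a setup could look like, the script below formats a test result and posts it to a Slack incoming webhook, which accepts a JSON body with a `text` field. The script path, the test name, and the webhook URL are placeholders assumed for this example, and the crontab line in the comment is one possible quarterly schedule, not the one we necessarily used.

```python
# Example crontab entry (hypothetical path): run at 03:00 on the 1st of
# January, April, July, and October.
#   0 3 1 1,4,7,10 * /usr/bin/python3 /opt/dr/run_dr_test.py

import json
import urllib.request


def build_report(test_name, passed, recovery_minutes):
    """Build the JSON payload for a Slack incoming webhook."""
    status = "PASSED" if passed else "FAILED"
    return {
        "text": f"DR test '{test_name}' {status}: "
                f"recovered in {recovery_minutes:.1f} min"
    }


def post_to_slack(webhook_url, payload):
    """POST the payload to the webhook and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


report = build_report("db-failover", passed=True, recovery_minutes=28.5)
# post_to_slack("<your webhook URL>", report)  # needs a real webhook URL
```

Keeping message formatting separate from the network call makes the report easy to check in CI without touching Slack.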

Real-world Examples of Successful Integration

After integrating automated testing, we reduced our average recovery time from over 6 hours to just under 30 minutes. One major incident that validated this change was an unexpected outage during peak traffic hours: because our failover procedures had already been exercised by the automated tests, we were able to re-route traffic efficiently and restore services within our defined RTO. To further explore how automation impacts overall DevOps practices, refer to integrating AI tools into your DevOps workflow.

Continuous Update and Improvement Process

Setting up a feedback loop is key. I encouraged teams to regularly discuss test outcomes during retrospectives. One DR simulation revealed a weak point in our data replication strategy, which we promptly addressed, leading to a stronger architecture.

Measuring Success: Metrics and KPIs for DR Testing

Key performance indicators like Mean Time to Recovery (MTTR) and success rates of automated tests provide tangible metrics for evaluating effectiveness. Using tools such as New Relic or CloudWatch allowed me to gather data and adjust our tests accordingly.
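
Both KPIs are simple to compute once the raw numbers are collected. The sketch below assumes recovery durations and pass/fail outcomes have already been exported from a monitoring tool into plain Python lists; the function names and sample figures are illustrative only.

```python
from datetime import timedelta


def mean_time_to_recovery(recovery_times):
    """Average of the recovery durations (MTTR)."""
    if not recovery_times:
        raise ValueError("need at least one recovery time")
    return sum(recovery_times, timedelta()) / len(recovery_times)


def success_rate(results):
    """Fraction of automated DR tests that passed, between 0.0 and 1.0."""
    if not results:
        raise ValueError("need at least one test result")
    return sum(1 for passed in results if passed) / len(results)


# Made-up sample data: three recoveries and four test runs.
mttr = mean_time_to_recovery([
    timedelta(minutes=20),
    timedelta(minutes=30),
    timedelta(minutes=40),
])
rate = success_rate([True, True, False, True])
```

Tracking these two values per quarter shows whether changes to the DR plan are actually improving recovery, rather than just changing it.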

Future Innovations in Disaster Recovery Testing

As the field evolves, integrating AI into DR testing could offer predictive capabilities, allowing us to foresee potential weaknesses before failures occur. Machine learning could analyse incident patterns to refine the DR plans continuously.

Conclusion: Taking Action for a Resilient Future

With the stakes higher than ever, investing in automated testing frameworks for disaster recovery is no longer optional; it’s imperative. By taking proactive steps toward an automated future, we empower ourselves to withstand disruptions confidently. It’s time to shift our culture toward resilience and adaptability—a change worth every effort.